Learning with our peers: peer-led versus instructor-led debriefing for simulated crises, a randomized controlled trial

Abstract

Background

Although peer-assisted learning is known to be effective for reciprocal learning in medical education, it has been understudied in simulation. We aimed to assess the effectiveness of peer-led compared to instructor-led debriefing for non-technical skill development in simulated crisis scenarios.

Methods

Sixty-one undergraduate medical students were randomized into the control group (instructor-led debriefing) or an intervention group (peer debriefer or peer debriefee group). After the pre-test simulation, the participants underwent two more simulation scenarios, each followed by a debriefing session. After the second debriefing session, the participants underwent an immediate post-test simulation on the same day and a retention post-test simulation two months later. Non-technical skills for the pre-test, immediate post-test, and retention tests were assessed by two blinded raters using the Ottawa Global Rating Scale (OGRS).

Results

The participants’ non-technical skill performance significantly improved in all groups from the pre-test to the immediate post-test, with changes in the OGRS scores of 15.0 (95% CI [11.4, 18.7]) in the instructor-led group, 15.3 (95% CI [11.5, 19.0]) in the peer-debriefer group, and 17.6 (95% CI [13.9, 21.4]) in the peer-debriefee group. After adjusting for year of medical school training, no significant differences in performance were found among debriefing modalities (P = 0.147) or between the immediate post-test and retention test (P = 0.358).

Conclusions

Peer-led debriefing was as effective as instructor-led debriefing at improving undergraduate medical students’ non-technical skill performance in simulated crisis situations. Peer debriefers, who observed rather than managed the training scenarios, also improved their non-technical skill performance. The peer debriefing model is a feasible alternative to the traditional, costlier instructor model.

Introduction

Full-scale, high-fidelity, manikin-based simulation is recognized as a powerful educational tool for developing the skills and competencies of healthcare professionals [1]. This modality of simulation is particularly useful for teaching critical care skills [2] and the principles of crisis resource management (CRM) [3]. Various studies have demonstrated the efficacy of manikin-based simulation in teaching non-technical skills, such as communication and teamwork, and in improving patient-level outcomes [4]. Poor development of these non-technical skills increases the likelihood of human error and threatens patient safety, particularly in crisis situations. In a simulated environment, clinical skills can be safely practiced and assessed without risk to patients [5]. Furthermore, studies have shown that technical and non-technical skills are correlated; that is, improvement in one tends to be accompanied by improvement in the other [6].

In simulation-based medical education, debriefing, “the feedback process that encourages learners to reflect on their performance” [7], is key to the success of experiential learning [8,9]. Debriefing is most commonly facilitated by an expert whose goal is to help learners identify and close gaps in their knowledge and skills in a safe learning environment. However, the wider implementation of simulation-based education may be limited by instructor availability and associated time costs [10].

Peer-assisted learning (PAL), in which trainees provide feedback to other trainees, is well described in medical education, although data in the simulation literature are limited [11]. PAL creates a low-risk, informal environment in which feedback is provided by individuals at similar cognitive phases of skill acquisition, thus encouraging reciprocity in learning. The potential benefits of PAL include increased accountability, critical thinking, increased self-disclosure (i.e., accepting the vulnerability of discussing one’s own performance challenges), and a reduced need for instructor availability [11]. Self-debriefing [7] and within-team debriefing [12] have been shown to be effective alternatives to instructor-led debriefing for CRM skill development in a simulation environment. Previous studies of peer debriefing have mainly assessed learners’ acceptance and satisfaction [13]. Our study aimed to compare the effect on learning of participating as a peer debriefer with that of being debriefed by a peer or by an instructor (control). To the best of our knowledge, no previous study has examined the effect of being a peer debriefer on learning. Because teaching has been described as one of the most effective strategies for learning [14], we hypothesized that peer debriefers would acquire the same level of CRM skills as the peers they debriefed and as the control group. We also hypothesized that skill retention would be superior in the peer debriefer group.

Materials and Methods

Study population and orientation

Institutional Research Ethics Board approval (20120938-01H) was obtained from the Centre Hospitalier Universitaire de Brest in France and from Ottawa in Canada, and the study was conducted in accordance with the 2013 Declaration of Helsinki. Fourth- and fifth-year medical students from the University of Brest were recruited for this study in 2013. Informed consent was obtained from all participants. To prevent study details from being divulged to potential participants, a confidentiality agreement was also obtained. All participants underwent a 30-min orientation to familiarize themselves with the key concepts and simulation equipment. They were shown a standardized teaching video on the principles of patient simulation, CRM, and non-technical skills [15]. They were then introduced to the Laerdal SimMan 3G simulator manikin (Laerdal Medical France), the monitors (Laerdal Medical France), and the simulation room. All participants completed a demographic questionnaire after their orientation.

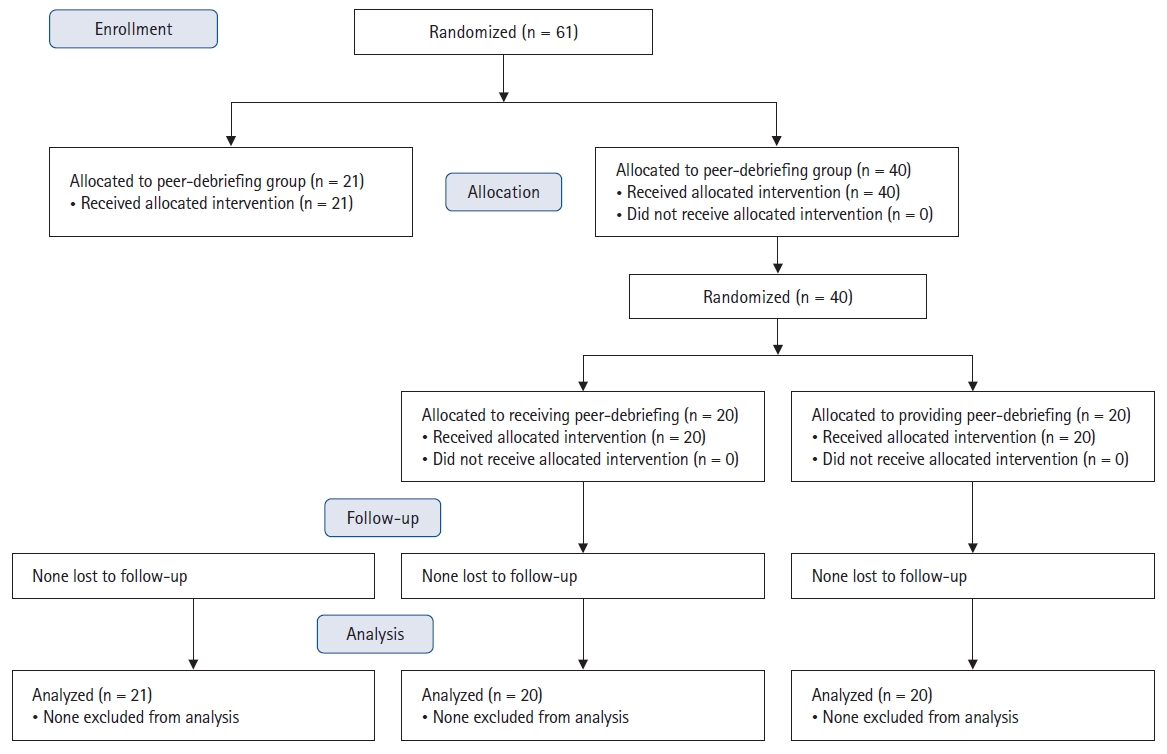

Study design

This study had a prospective, randomized, controlled, repeated-measures design. After orientation, all participants individually managed a high-fidelity simulated hypotensive crisis scenario (pre-test). Each study day was randomized as either a control day (instructor group) or an intervention day (debriefer/debriefee groups), and participants were allocated to days according to their scheduling availability. Participants in the control group (n = 21) individually completed two subsequent simulation scenarios, each followed by instructor-led debriefing. The participants in the peer debriefing group (n = 40) were randomized into either the peer debriefee/learner (PL) or the peer debriefer/teacher (PT) group using sealed envelopes, with stratification according to year, so that each participant was paired with another participant of the same year (Fig. 1). Each PL then completed two successive simulated crisis scenarios, each followed by a peer-led debriefing. PTs only observed their peers; they did not manage the scenarios themselves. The PT used a debriefing form developed from a best-practice guide structured around three aspects: (i) actions observed, (ii) use of good judgement, and (iii) exploring and closing the gaps observed [16,17]. This structure was used to guide the debriefing. For the second scenario, partner pairings were randomly changed to ensure that no participant was debriefed twice by the same peer. Debriefing sessions were limited to 20 min in all groups. After the second debriefing, all participants, including peer debriefers, independently completed an immediate post-test scenario, followed by a retention post-test two months later.
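The stratified allocation and same-year re-pairing described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used in the study: the `(participant_id, year)` input format, the function name `allocate_and_pair`, the requirement that each year split evenly into at least two pairs, and the use of a seeded pseudorandom shuffle in place of sealed envelopes are all hypothetical. The second-round re-pairing draws a random derangement by rejection sampling, so no learner meets the same debriefer twice. Day-level randomization to control or intervention days is not modeled.

```python
import random
from collections import defaultdict


def allocate_and_pair(participants, seed=None):
    """Stratified PL/PT allocation with same-year pairing (illustrative sketch).

    participants: list of (participant_id, year) tuples (hypothetical format).
    Returns (roles, round1, round2); round1 and round2 are lists of
    (learner_id, debriefer_id) pairs for the two training scenarios.
    """
    rng = random.Random(seed)
    by_year = defaultdict(list)
    for pid, year in participants:
        by_year[year].append(pid)

    roles, round1, round2 = {}, [], []
    for year, ids in sorted(by_year.items()):
        # Assumption: each year stratum splits evenly into at least 2 pairs.
        if len(ids) % 2 or len(ids) < 4:
            raise ValueError(f"year {year}: need an even count of at least 4")
        ids = ids[:]
        rng.shuffle(ids)  # stands in for drawing sealed envelopes
        half = len(ids) // 2
        learners, debriefers = ids[:half], ids[half:]
        roles.update({pid: "PL" for pid in learners})
        roles.update({pid: "PT" for pid in debriefers})

        # Scenario 1: pair each learner with a debriefer from the same year.
        round1.extend(zip(learners, debriefers))

        # Scenario 2: random re-pairing (rejection-sampled derangement) so
        # that no learner is debriefed twice by the same peer.
        while True:
            shuffled = debriefers[:]
            rng.shuffle(shuffled)
            if all(a != b for a, b in zip(shuffled, debriefers)):
                break
        round2.extend(zip(learners, shuffled))
    return roles, round1, round2


# Usage with 40 hypothetical participants, 20 per year.
people = [(f"Y4-{i}", 4) for i in range(20)] + [(f"Y5-{i}", 5) for i in range(20)]
roles, r1, r2 = allocate_and_pair(people, seed=2013)
```

With 20 hypothetical participants per year, every pair stays within a year stratum, and each learner's second debriefer differs from the first.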

Two trained confederates (i.e., actors playing the role of other healthcare professionals in the scenarios) were used for each scenario. Their roles were scripted, and they performed tasks only when directed by the participant, because it was critical that the confederates not offer crisis management advice. The pre-test, immediate post-test, and retention post-test scenarios simulated intra-abdominal hemorrhagic shock, whereas the two training scenarios simulated anaphylactic and septic shock in random order. Each scenario lasted five minutes. To evaluate skill performance, all scenarios were video recorded and overlaid with the patient’s vital signs before being sent to the video raters.

Simulation scenarios

The scenarios were created and judged to be of comparable difficulty through an iterative review by emergency simulation faculty and students. Shock was chosen as the theme because it is a realistic critical situation whose first few minutes students may need to manage in actual clinical practice. These scenarios involve many standardized procedures and decision-making processes, and all non-technical CRM skills can be assessed during these short scenarios. To avoid order effects, the order of the two training scenarios was randomized for each participant and balanced across the groups.
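One simple way to randomize the order of the two training scenarios while keeping the orders balanced within a group is to build a list containing each order equally often and shuffle it. The sketch below assumes this approach; the study does not report how balance was achieved, and the function name `assign_scenario_orders`, the participant IDs, and the seed are hypothetical.

```python
import random


def assign_scenario_orders(group_members, seed=None):
    """Counterbalance the two training scenarios within one group (sketch).

    The two possible orders are used equally often (differing by at most one
    for an odd-sized group); which participant gets which order is random.
    """
    rng = random.Random(seed)
    orders = [
        ("anaphylactic shock", "septic shock"),
        ("septic shock", "anaphylactic shock"),
    ]
    # Alternate the two orders to build a balanced pool, then shuffle it.
    pool = [orders[i % 2] for i in range(len(group_members))]
    rng.shuffle(pool)
    return dict(zip(group_members, pool))


control = [f"C{i}" for i in range(21)]  # hypothetical IDs; control group n = 21
plan = assign_scenario_orders(control, seed=7)
```

For a 21-member group, 11 participants receive anaphylactic shock first and 10 receive septic shock first.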

Outcome measures

Our primary outcome was the change in non-technical skill performance of peer debriefers, as measured by the Ottawa Global Rating Scale (OGRS). The secondary outcome was the relative effectiveness of receiving instructor-led debriefing, being a peer debriefee, and being a peer debriefer on non-technical skill performance in a CRM simulation session. Performance was assessed immediately after debriefing and again two months later. The OGRS has been shown to be a valid and reliable tool for assessing emergency physicians’ non-technical skills [18]. It encompasses five skill categories: leadership, problem solving, situational awareness, resource utilization, and communication. Each category is scored on a 7-point scale from 1 (minimum) to 7 (maximum), and behavioral descriptors for each category help the rater select the most appropriate score. For instance, a low leadership score corresponds to “loses calm and control during most of the crisis; unable to make firm decisions, etc.,” whereas a high score corresponds to “remains calm and in control during the entire crisis; makes prompt and firm decisions without delay, etc.” The five category scores are summed to give a total OGRS score ranging from 5 to 35 [18].
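The total-score arithmetic can be made concrete with a short helper. It is an illustration of the scoring rule only, not an official OGRS implementation: it assumes integer ratings from 1 to 7 per category, and the dictionary keys and example ratings are hypothetical.

```python
# Hypothetical keys for the five OGRS categories.
OGRS_CATEGORIES = (
    "leadership",
    "problem_solving",
    "situational_awareness",
    "resource_utilization",
    "communication",
)


def ogrs_total(scores):
    """Sum the five OGRS category scores (each 1-7) into a total from 5 to 35."""
    missing = set(OGRS_CATEGORIES) - set(scores)
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    total = 0
    for category in OGRS_CATEGORIES:
        value = scores[category]
        # Assumption: each rater gives an integer rating on the 1-7 scale.
        if not (isinstance(value, int) and 1 <= value <= 7):
            raise ValueError(f"{category} must be an integer from 1 to 7, got {value!r}")
        total += value
    return total


example = {  # hypothetical ratings for one participant
    "leadership": 4,
    "problem_solving": 3,
    "situational_awareness": 5,
    "resource_utilization": 4,
    "communication": 6,
}
print(ogrs_total(example))  # 22
```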

Two raters with extensive experience in simulation and CRM principles were trained to evaluate all video data. Rater training consisted of (i) familiarization with the OGRS, (ii) scoring of 12 training videos of simulated crises comparable to those used in the current study, and (iii) group discussion of the individual ratings of these videos until the raters and principal investigator reached consensus. Training took six hours, and validation was established when the raters reached substantial agreement on their ratings of the training videos. Once the desired inter-rater reliability was reached, the two raters independently rated the study videos in random order, blinded to the participants’ group allocation, test phase, and year of training. Of note, only the raters scored participants’ performances. During the debriefing, the debriefers (either instructors or peer debriefers) made comments and asked questions to foster participants’ reflection, but they did not score performance using the OGRS.

Statistical analysis

Demographic data were analyzed using the chi-square and Mann-Whitney U tests. Inter-rater reliability was assessed using the intraclass correlation coefficient of the total OGRS score. A two-sided significance level of 0.05 was set a priori for all statistical comparisons. SPSS (version 23.0) was used for all analyses.
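The paper does not state which form of the intraclass correlation coefficient was computed in SPSS. As one plausible choice, the sketch below computes the two-way random-effects, absolute-agreement coefficients in the Shrout and Fleiss notation, for a single rater, ICC(2,1), and for the mean of k raters, ICC(2,k), from the usual two-way ANOVA mean squares. It then averages the raters' totals per participant, as described in the Results. The function names and rating values are hypothetical.

```python
def icc2(ratings):
    """Two-way random-effects, absolute-agreement ICCs: (ICC(2,1), ICC(2,k)).

    ratings: list of rows, one per participant video, each row holding the
    total OGRS score given by each of k raters (no missing values).
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least 2 subjects, 2 raters, and a complete table")
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols  # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    single = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    average = (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
    return single, average


def mean_scores(ratings):
    """Average the raters' totals per participant (used once agreement is high)."""
    return [sum(row) / len(row) for row in ratings]


# Hypothetical total OGRS scores from two raters for five videos.
two_raters = [[12, 13], [20, 19], [27, 27], [15, 16], [31, 30]]
single, average = icc2(two_raters)
```

Because the mean of the two raters' scores was analyzed, ICC(2,k) is arguably the coefficient that describes the reliability of the analyzed scores, whereas ICC(2,1) describes a single rater.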

We used a repeated-measures general linear model (GLM) with the participant’s level of training (year 4 or 5) as a covariate. For the main analysis of the primary outcome measure, the total OGRS score was the dependent variable. The independent variables were the test phase (pre-test vs. post-test vs. retention test) for the within-subject analysis and the type of debriefing (peer debriefer vs. peer debriefee vs. instructor debriefing) for the between-subject analysis. This approach allowed us to compare the effects of the type of debriefing and the test phase on skill performance while controlling for level of training. Moreover, we were able to examine interactions among these three variables in cases in which pre-test performance differed significantly among groups. We used estimated marginal means to compare groups at the pre-test, post-test, and retention test. Sidak’s adjustment was used for post hoc comparisons.
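For the post hoc comparisons, the Sidak correction keeps the family-wise error rate at alpha across m comparisons by testing each comparison at 1 - (1 - alpha)^(1/m), or equivalently by adjusting each p-value to 1 - (1 - p)^m. The sketch below implements these standard formulas; that SPSS applies them in exactly this form to the estimated marginal means is an assumption, and the p-value of 0.02 is hypothetical.

```python
def sidak_alpha(alpha, m):
    """Per-comparison significance level keeping the family-wise error rate at alpha."""
    return 1 - (1 - alpha) ** (1 / m)


def sidak_adjust(p, m):
    """Sidak-adjusted p-value for one of m comparisons (capped at 1)."""
    return min(1.0, 1 - (1 - p) ** m)


# Three debriefing groups give m = 3 pairwise comparisons.
m = 3
print(round(sidak_alpha(0.05, m), 4))   # 0.017
print(round(sidak_adjust(0.02, m), 4))  # 0.0588
```

An unadjusted p of 0.02 therefore becomes about 0.059 after adjustment, which is no longer below 0.05.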

Because previously published OGRS data involved postgraduate trainees, pilot data for undergraduates were not available at the time of study planning. We therefore based the sample size calculation on the expected effect size. In psychology and education, a Cohen’s f effect size of 0.4 or greater is considered large and acceptable for a given teaching intervention. Consistent with the analysis described above, we used an F-test to calculate the sample size in G*Power software (version 3.12). Assuming an effect size of 0.4, a 2-tailed α of 0.05, a power of 0.80, three groups, three measurement timepoints, and a correlation of 0.5 among repeated measures, we calculated a total sample size of 45. We thus aimed to recruit 60 participants (20 per group) to allow for 25% attrition by the time of the retention test.
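The sample size arithmetic can be sketched as below. The paper does not name the G*Power test family, so the noncentrality formula here is an assumption: it is taken to match G*Power's parameterization for the between-subjects factor of a repeated-measures design, lambda = f^2 * N * m / (1 + (m - 1) * rho). Power would then follow from a noncentral F distribution with 2 and N - 3 degrees of freedom (for example, via `scipy.stats.ncf`), which is omitted to keep the sketch dependency-free. The attrition step reproduces the reported inflation from 45 to 60 participants; the function names are hypothetical.

```python
import math


def noncentrality_between(f, n_total, m, rho):
    """Noncentrality for the between-subjects effect in a repeated-measures design.

    Assumed parameterization: lambda = f^2 * N * m / (1 + (m - 1) * rho).
    """
    return f ** 2 * n_total * m / (1 + (m - 1) * rho)


def recruitment_target(n_required, attrition, groups):
    """Inflate a required sample size for attrition, rounded up to equal groups."""
    inflated = n_required / (1 - attrition)
    per_group = math.ceil(inflated / groups)
    return per_group * groups, per_group


lam = noncentrality_between(f=0.4, n_total=45, m=3, rho=0.5)
print(round(lam, 2))                    # 10.8
print(recruitment_target(45, 0.25, 3))  # (60, 20)
```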

Results

Demographics

Sixty-one participants were recruited and completed the study. A summary of the participants’ characteristics is presented in Table 1. Year of medical school differed significantly among the groups; we therefore included it as a covariate in the analysis.

Inter-rater reliability

Overall inter-rater reliability for the total OGRS score was almost perfect (intraclass correlation coefficient, 0.95; P < 0.001). Given this high level of agreement, the mean of the two raters’ OGRS scores was used for the analysis.

Primary outcome

The peer debriefers demonstrated a statistically significant improvement in their total OGRS scores between the pre-test and post-test and between the pre-test and retention test (P < 0.001). The overall OGRS scores also significantly improved between the pre-test and post-test and between the pre-test and retention test for the control group and peer debriefees (P < 0.001). For the pre-test, post-test, and retention-test phases, no significant differences in the mean total OGRS scores were found among the debriefing modalities (P = 0.147) when the year of medical school training covariate was accounted for.

Estimated marginal means for the change in total OGRS score between the pre-test and post-test were 15.0 (95% CI [11.4, 18.7]) for the instructor debriefing group, 15.3 (95% CI [11.5, 19.0]) for the debriefer group, and 17.6 (95% CI [13.9, 21.4]) for the debriefee group. Estimated marginal means for the change in the total OGRS score between the pre-test and retention test were 12.8 (95% CI [9.1, 16.4]) for the instructor debriefing group, 14.8 (95% CI [11.0, 18.6]) for the debriefer group, and 16.3 (95% CI [12.6, 20.1]) for the debriefee group. No significant differences in performance were found between the post-test and retention test (P = 0.358).

Of note, for the main analysis, the Kolmogorov-Smirnov test did not indicate significant departures from normality (P > 0.05), except for the peer-debriefee group at the pre-test (P = 0.039). Mauchly’s test indicated that the sphericity assumption was not violated (P > 0.95).

These results indicate that participants experienced significant and comparable improvement from pre-test to post-test, irrespective of the intervention (instructor vs. peer debriefing), and that the improvement was retained for at least two months (Table 2).

Discussion

Our study aimed to investigate the relative efficacy of participating as a peer debriefer compared with receiving peer- or instructor-led debriefing on non-technical skill performance during CRM. Our results demonstrate that non-technical skill performance during simulated crisis scenarios improved for peer debriefers similarly to that for participants who received instructor debriefing. Non-technical skill performance improved regardless of the debriefing modality and was retained for at least two months.

This lack of difference in non-technical skill improvement between peer debriefers and participants who received instructor- or peer-led debriefing highlights the educational benefit of PAL to both teachers and learners. Active engagement through reviewing peers may facilitate student learning [19]. Furthermore, trainees’ involvement in feedback and assessment processes may contribute to the development of facilitation and communication skills, lifelong learning competencies, and critical thinking and reflection [20]. This result aligns with the findings of other randomized controlled trials that students who observe a simulated performance learn crisis management skills to the same extent as active participants, provided that the observers actively participate in the debriefing [21,22]. Our study adds to the evidence that debriefing is a critical component of learning in simulation-based education.

Regardless of group allocation, students’ performance did not decline after two months. This suggests that, after three short scenarios, the participants achieved a high level of non-technical skill performance for managing the first five minutes of a crisis situation, even in the two groups without an instructor. Of note, we did not examine the content of the debriefings, and peer assessors may use a different approach to debriefing than instructors [20]. Moreover, faculty and peer assessors do not apply the same strategies to observe and assess situations. Students may have different interests when observing the performance of their peers, and matching and modeling peer performance could be a powerful tool to help students bridge the gap between the learning and application contexts [23]. However, analyzing qualitative differences in debriefing styles between peers and instructors [24] was beyond the scope of this study and could be the subject of future investigations.

The retention test, like the pre- and post-tests, consisted of a hypotensive shock scenario. Therefore, future studies should determine whether peer debriefing is as effective as instructor debriefing when learned non-technical skills must be transferred to other CRM scenarios. Given the homogeneity of the scenarios, it could also be argued that the improvement in non-technical skills across all three groups resulted from practice alone rather than from debriefing. However, we assessed non-technical skills rather than knowledge of medical management. In addition, although we did not include a control group of participants who completed scenarios without feedback, a previous study showed that residents who managed simulation scenarios without debriefing did not improve their non-technical skills [8]. We therefore compared peer debriefing with the currently accepted gold standard of instructor debriefing.

Overall, our results challenge the traditional view that expert instructors must facilitate simulation debriefing. Although our study was not statistically powered to conclude that the peer-debriefer and peer-debriefee strategies were as effective as instructor debriefing, our results suggest that PAL strategies may be a valuable adjunct to expert-facilitated simulation debriefing. Non-technical skill scores were high, indicating that the participants improved quickly and developed a high level of fundamental skills. This is particularly interesting given that undergraduate students have been found to feel uncomfortable giving feedback to others because they consider themselves not competent enough to assess their peers [25]. None of the students had experience leading a crisis situation; for most of them, this was the first opportunity to experience shock in its multiple dimensions. The peer debriefers thus faced two new tasks at once: learning to facilitate a debriefing and learning the medical content. We are not suggesting that an expert instructor is unnecessary for debriefing [26]; rather, we recommend considering when expert facilitation is most essential. Established alternatives to instructor debriefing (self-debriefing, within-team debriefing) [7,12,27] should encourage further development in curriculum design.

Our study had several limitations. Although all participants were retained, a relatively small number of fourth- and fifth-year undergraduate medical students from the University of Brest were enrolled in this single-center study. Because medical curricula and resultant attitudes toward simulation vary across institutions, our findings may not be generalizable to all medical students or to more advanced trainees such as residents. In our study, only peer debriefers observed their peers completing the two scenarios following the pre-test; none of the other participants had the opportunity to observe their peers in the hot seat. Because they observed rather than managed these scenarios, peer debriefers had less hands-on practice, which may explain why this group did not outperform the others on the post-test and retention test. In addition, we cannot determine whether the peer debriefers’ learning of non-technical skills resulted from observing their peers, from facilitating the debriefing, or both. Finally, the implications of our study may vary across countries and organizations. While most centers in North America need to compensate instructors for their time, in other countries or centers instructors may be salaried. Additionally, despite the significant upfront cost of purchasing a simulation manikin, it can be used virtually around the clock and lasts for many years. Therefore, the recurrent cost of instructors’ time is the main barrier to conducting simulations in centers that need to pay for instructors.

In conclusion, all participants’ performance of non-technical skills in simulated crisis scenarios, as measured by the OGRS, improved and was retained for at least two months in this study. We found no difference in the degree of improvement in non-technical skill performance among participants, regardless of whether they provided debriefing to their peers or were debriefed by a peer or an instructor. These findings suggest a potential role for PAL in teaching non-technical skills in CRM. Peer assessment may help offset the limited availability of expert instructors, which is a barrier to the wider implementation of simulation-based medical education. Moreover, the educational benefit for peer debriefers demonstrated in our study makes a compelling argument for incorporating peer assessment activities into medical curricula. This is consistent with data from previous randomized controlled trials investigating alternatives to instructor debriefing, and PAL may play a role alongside the gold standard of expert instructor debriefing.

Acknowledgements

We would like to thank Guy Bescond, Julien Cabon, and Sophie Fleureau for their technical support during the simulation sessions and the confederates and medical students of the University of Brest (France) for their participation in this study. We would also like to thank the Center de SIMulation en santé (CESIM) for their assistance with data collection and Ashlee-Ann Pigford and Hladkowicz for their administrative and technical support throughout the research project.

Notes

Funding

This study was supported by a research grant from the Academy of Innovation in Medical Education at the University of Ottawa, Canada. Dr. Boet was supported by The Ottawa Hospital Anesthesia Alternate Funds Association and the Faculty of Medicine, University of Ottawa with a Tier 2 clinical research chair.

Conflicts of Interest

No potential conflict of interest relevant to this article was reported.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Author Contributions

Morgan Jaffrelot (Conceptualization; Data curation; Formal analysis; Funding acquisition; Investigation; Methodology; Project administration; Resources; Validation; Visualization; Writing – original draft; Writing – review & editing)

Sylvain Boet (Conceptualization; Formal analysis; Funding acquisition; Investigation; Methodology; Project administration; Resources; Validation; Visualization; Writing – original draft; Writing – review & editing)

Yolande Floch (Conceptualization; Resources; Writing – review & editing)

Nitan Garg (Investigation; Resources; Writing – review & editing)

Daniel Dubois (Investigation; Resources; Writing – review & editing)

Violaine Laparra (Investigation; Resources; Writing – review & editing)

Lionel Touffet (Investigation; Resources; Writing – review & editing)

M. Dylan Bould (Conceptualization; Data curation; Formal analysis; Funding acquisition; Investigation; Methodology; Project administration; Resources; Supervision; Validation; Visualization; Writing – original draft; Writing – review & editing)